Imagine coming to a crowded fast food restaurant to order the best taco in the country, only to find a single cook working there. He prepares the tacos one by one. Although the cook has spare time while waiting for a tortilla to heat up, he doesn’t start on another order until the first one is finished.

That’s your software without concurrency and parallelism.

But what are the differences between those two? How are they handled in Elixir? Let’s start from the beginning.

Concurrency vs parallelism

To better understand the difference, let’s take a closer look at the restaurant problem described above.

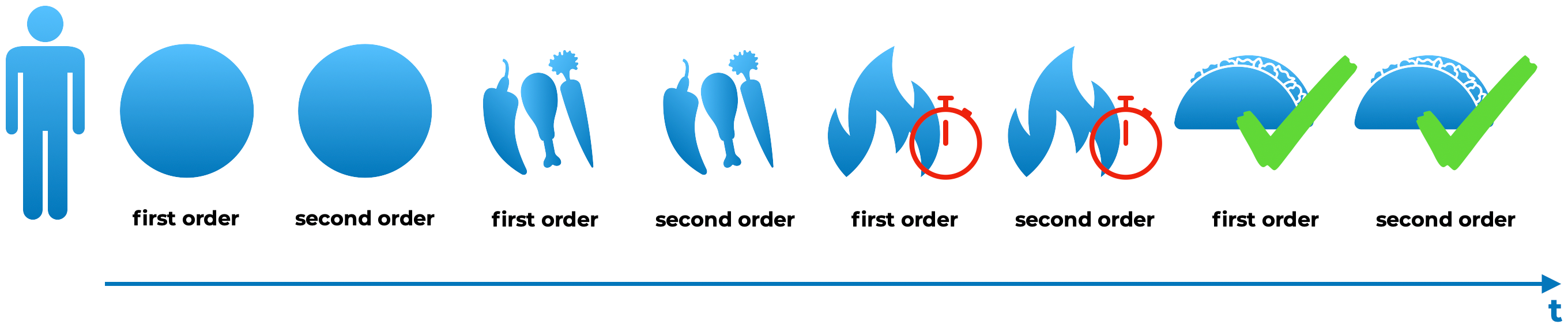

Imagine that the cook has just received orders for two tacos. So far, he has prepared each order separately: the first taco from beginning to end, and then the second one in the same way. This time, however, he decides to work on both at once. The steps he takes look as follows:

- The cook’s preparing a tortilla for the first order,

- The cook’s preparing a tortilla for the second order,

- For the first order, the cook’s putting vegetables and chicken on a tortilla,

- For the second order, the cook’s putting vegetables and chicken on a tortilla,

- The cook’s putting a taco from the first order into the cooker to heat it up,

- The cook’s putting a taco from the second order into the cooker to heat it up,

- The taco from the first order is ready to serve,

- The taco from the second order is ready to serve.

The steps above represent concurrent execution. The cook appeared to prepare two orders at the same time, but in fact he was switching back and forth between the two processes (preparations).

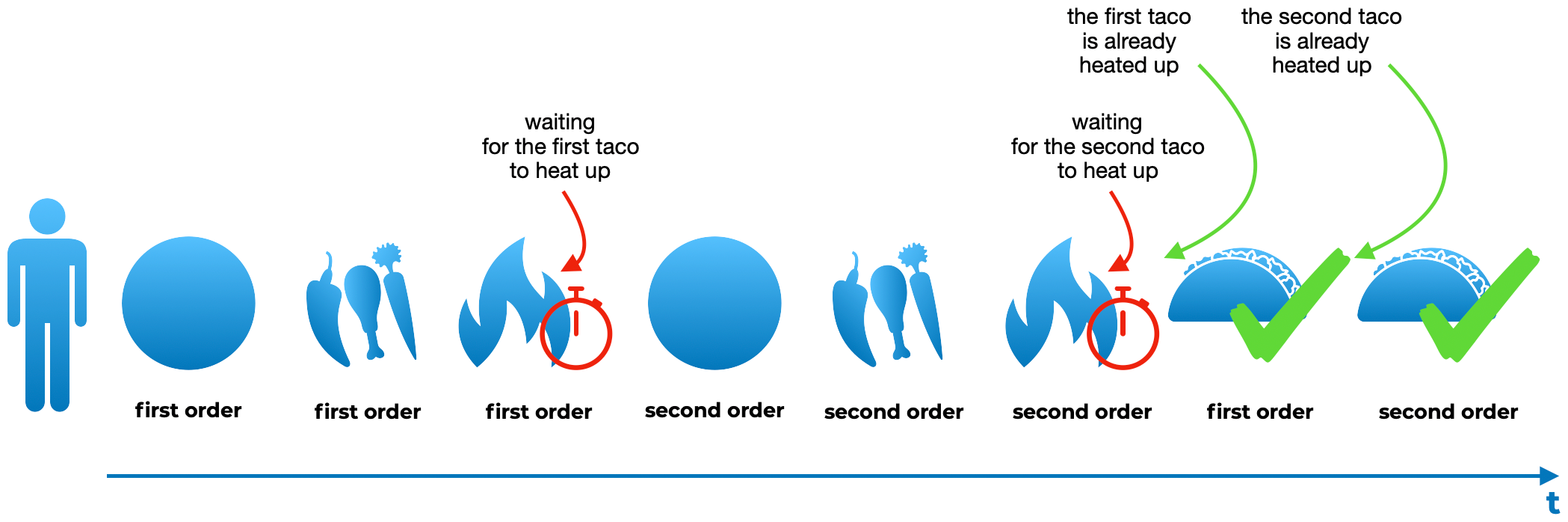

We might wonder how much time this approach saves compared to preparing each order separately. Remember that before the cook serves a taco, he needs to wait until it heats up. Standing idle during that time would be a waste.

Concurrency lets us start preparing another order while the first taco is heating up.
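To make this concrete, here is a minimal Elixir sketch of the two approaches. The `TacoStand` module and its `prepare/1` function are hypothetical names invented for this illustration, and the heating time is simulated with `Process.sleep/1`.

```elixir
defmodule TacoStand do
  # Hypothetical helper: "heating" is simulated with a 100 ms sleep,
  # during which the VM is free to run other processes.
  def prepare(order) do
    Process.sleep(100)
    {:ready, order}
  end

  # One order after another, as the cook did at first.
  def sequential(orders), do: Enum.map(orders, &prepare/1)

  # One task (an Erlang process) per order, then wait for every result.
  def concurrent(orders) do
    orders
    |> Enum.map(fn order -> Task.async(fn -> prepare(order) end) end)
    |> Enum.map(&Task.await/1)
  end
end

# :timer.tc/1 returns {elapsed_microseconds, result}.
{seq_us, seq} = :timer.tc(fn -> TacoStand.sequential([1, 2]) end)
{con_us, con} = :timer.tc(fn -> TacoStand.concurrent([1, 2]) end)

IO.inspect({div(seq_us, 1000), seq}, label: "sequential (ms, result)")
IO.inspect({div(con_us, 1000), con}, label: "concurrent (ms, result)")
```

Both versions return the same tacos in the same order. The concurrent one should finish in roughly the time of a single heating step, because the waits overlap.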

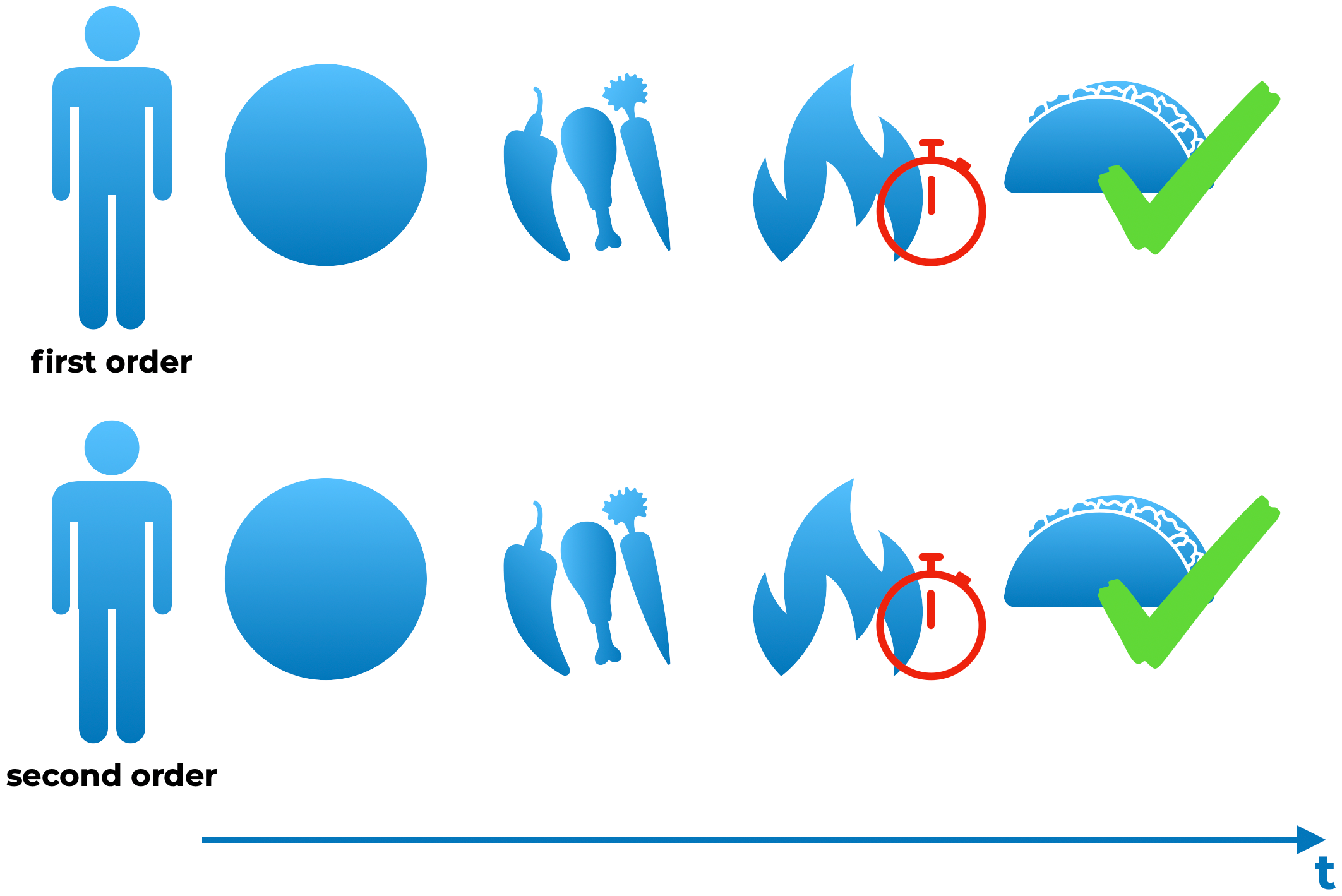

What if the restaurant hired another cook to help? Then they would prepare tacos together. The main cook would prepare the first order and the newly hired employee would prepare the second one. As a result, the two tacos would be ready up to twice as fast.

Such an approach, in which the system handles two or more processes at exactly the same time, is called parallel processing.

Note that you need at least two CPU cores to take advantage of parallelism.

To get the highest performance, you can use parallelism and concurrency together.
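In Elixir, one way to combine the two is `Task.async_stream/3`. The sketch below is only an illustration: squaring numbers stands in for real work, and capping `max_concurrency` at the number of online schedulers is an assumption made for the example, not a required setting.

```elixir
# Number of schedulers currently online, usually one per CPU core.
cooks = System.schedulers_online()

squares =
  1..8
  # Runs the function in separate processes, at most `cooks` at a time.
  |> Task.async_stream(fn n -> n * n end, max_concurrency: cooks)
  # Results arrive as {:ok, value} tuples, in the order of the input.
  |> Enum.map(fn {:ok, result} -> result end)

IO.inspect(cooks, label: "cooks")
IO.inspect(squares, label: "squares")
```

On a machine with several cores the tasks can run in parallel. On a single core the same code still works, but the tasks only run concurrently.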

So how exactly does BEAM work?

Elixir is built on top of the Erlang Virtual Machine, also called BEAM (Bogdan’s Erlang Abstract Machine), so Elixir and its Phoenix framework inherit all the benefits of Erlang. This means that we can build highly concurrent systems that also run in parallel, which significantly improves their performance.

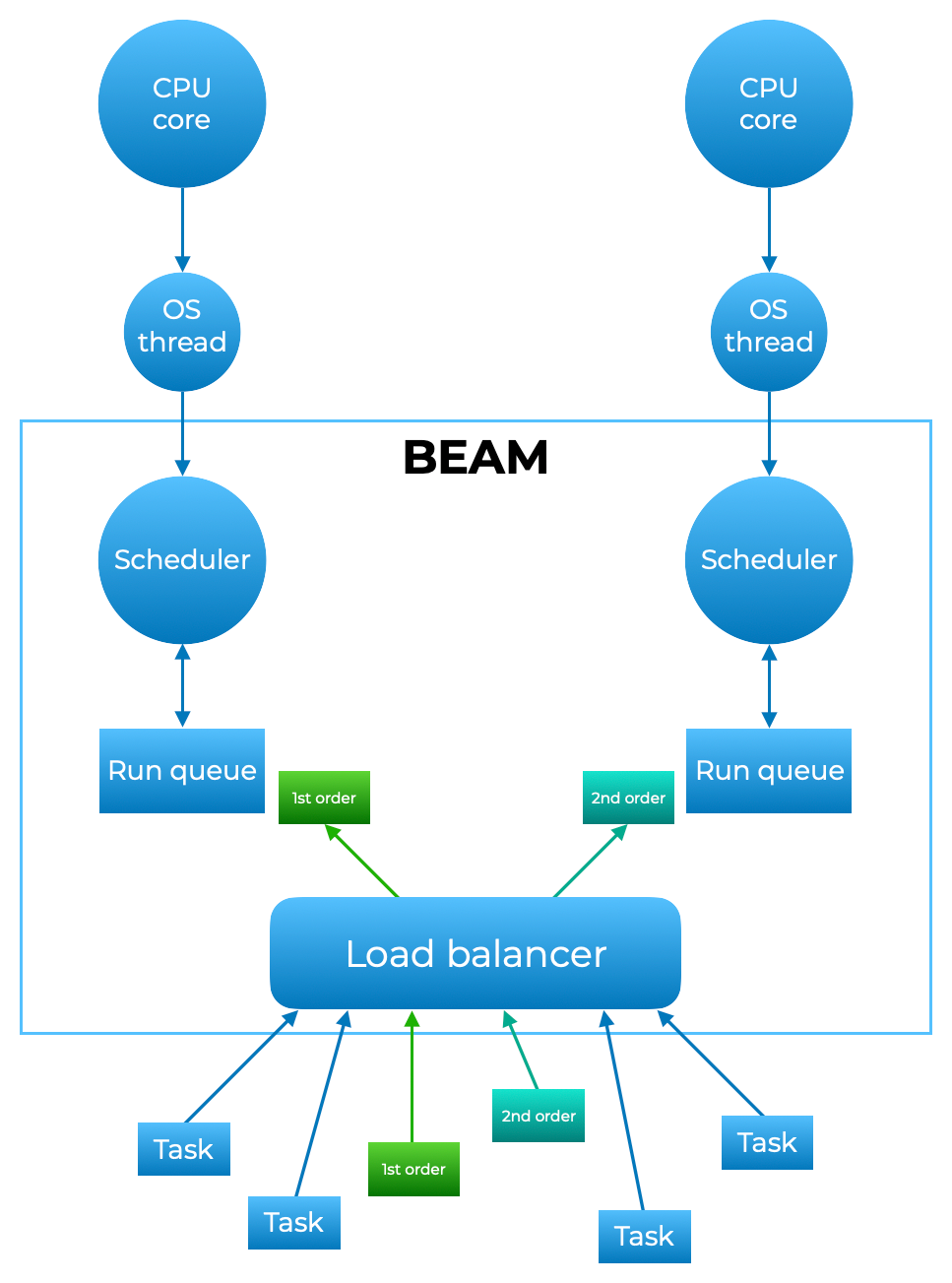

By default, BEAM runs one operating system thread for scheduling on each processor core. On each thread, the Erlang VM (I’ll use the term interchangeably with BEAM) runs its own scheduler. In turn, each scheduler has its own run queue. A run queue holds the processes that are waiting to run; the scheduler pulls them from the queue and executes them.
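You can check the scheduler setup of a running VM with `:erlang.system_info/1`. This is a small sketch; the values depend on your machine and on the flags the VM was started with.

```elixir
# Scheduler threads created when the VM started.
schedulers = :erlang.system_info(:schedulers)

# Schedulers currently allowed to run processes.
online = :erlang.system_info(:schedulers_online)

# Logical processors available to the VM; may be :unknown on some systems.
logical = :erlang.system_info(:logical_processors_available)

IO.inspect(schedulers, label: "schedulers")
IO.inspect(online, label: "schedulers online")
IO.inspect(logical, label: "logical processors available")
```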

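Data is not shared between queues. Because each scheduler has its own independent run queue, no locks on data are needed to avoid dirty reads and writes. That’s why all the OS threads can be kept busy all the time. Schedulers also aren’t delayed by slow-running processes: BEAM schedules preemptively, so a process that has used up its share of work is paused and put back in the queue to let the others run.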
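One way to see that a slow process does not hold up the others is to keep every scheduler busy and then run a short task. In the sketch below, the `Busy` module is a hypothetical CPU hog written for this illustration.

```elixir
defmodule Busy do
  # Hypothetical CPU hog: loops forever and never waits for anything.
  def spin, do: spin()
end

# Start twice as many hogs as there are schedulers, so each one is busy.
hogs = for _ <- 1..(System.schedulers_online() * 2), do: spawn(&Busy.spin/0)

# The short task still gets its turn, because the VM pauses the hogs
# regularly instead of waiting for them to finish.
answer =
  fn -> Enum.sum(1..100) end
  |> Task.async()
  |> Task.await(5_000)

# Clean up the hogs so they stop burning CPU.
Enum.each(hogs, &Process.exit(&1, :kill))

IO.inspect(answer, label: "answer")
```

The task returns well within the timeout even though the hogs never give up the CPU voluntarily.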

As for the processes themselves, they are not typical operating system processes. They are Erlang processes, which are lightweight compared to OS threads and processes (a newly spawned one uses 309 words of memory; the exact figure may vary with the runtime version). What’s more, each process is fast to create and terminate, and its scheduling overhead is low.
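To get a feel for how cheap these processes are, you can spawn a batch of them and ask the VM how much memory one uses. This is a rough sketch: the batch size of 10,000 is arbitrary, and the numbers you see will depend on your Erlang/OTP version.

```elixir
# A process that does nothing but wait for a :stop message.
idle = fn ->
  receive do
    :stop -> :ok
  end
end

pid = spawn(idle)

# Process.info/2 reports the memory of a live process in bytes.
{:memory, bytes} = Process.info(pid, :memory)
words = div(bytes, :erlang.system_info(:wordsize))

# Spawning thousands of processes is routine on the BEAM.
pids = for _ <- 1..10_000, do: spawn(idle)
count = length(pids)

# Ask every process to finish.
Enum.each([pid | pids], &send(&1, :stop))

IO.inspect(words, label: "words used by one idle process")
IO.inspect(count, label: "processes spawned")
```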

BEAM is also responsible for distributing processes between the run queues on separate cores. This is done by BEAM’s load balancer, which uses two techniques to balance the load: task stealing and migration. It takes tasks away from overloaded run queues and moves them to the ones with free resources.

The load balancer’s main goal is to keep the number of runnable processes roughly equal across the active schedulers. At the same time, it tries to use as few schedulers as possible without overloading any CPU.
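The balancer itself is internal to the VM, but you can observe its effect by looking at the run queue lengths through `:erlang.statistics/1`. The snippet below only reads those counters; on an idle system most of them will simply be zero.

```elixir
# One entry per run queue: how many processes and ports are ready to run.
queue_lengths = :erlang.statistics(:run_queue_lengths)

# A single total across the same run queues.
total = :erlang.statistics(:total_run_queue_lengths)

IO.inspect(queue_lengths, label: "run queue lengths")
IO.inspect(total, label: "total ready to run")
```

Sampling these values under load gives a rough picture of how evenly work is spread across the schedulers.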

Summing up

When building a fault-tolerant and concurrent system, we should be aware of what the platform makes possible. Knowing how the Erlang VM works can help us understand and solve everyday problems. What’s more, it lets us make full use of its features to build reliable applications.

To learn more about how Erlang works, read “The Erlang Runtime System” by Erik Stenman or visit erlang.org to get acquainted with the documentation.

Originally published at https://appunite.com on Aug 25, 2020.